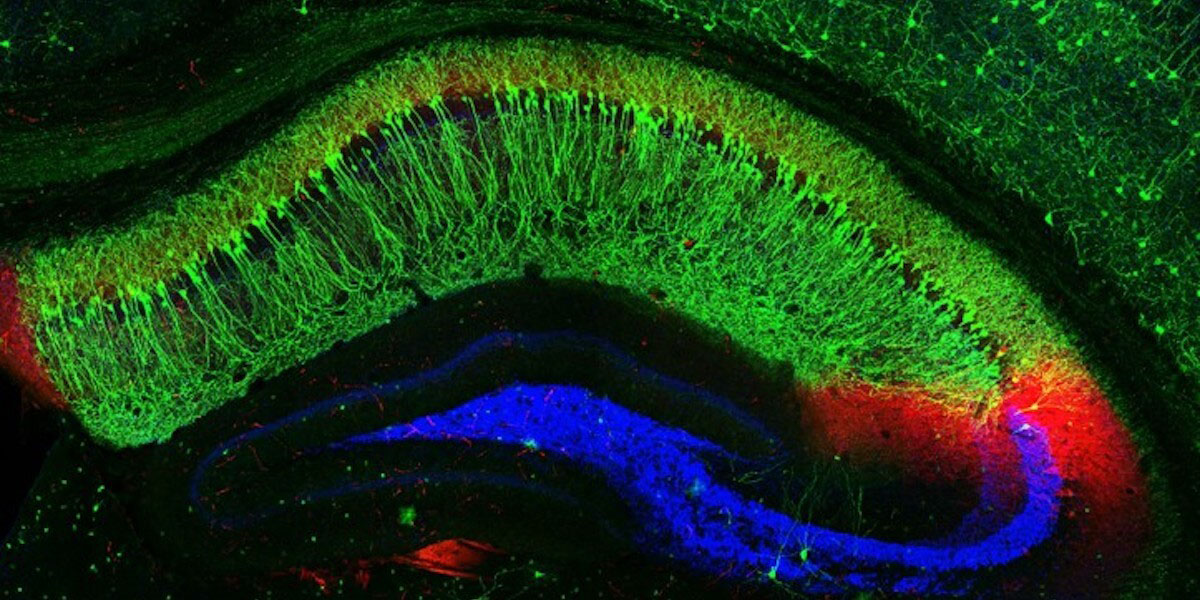

We develop biomimetic devices and next-generation neural interfaces to understand brain functions and build cortical prostheses. Our research focuses on investigating cognitive processes during naturalistic behaviors, particularly in regions like the hippocampus, to advance treatments for neurological disorders.

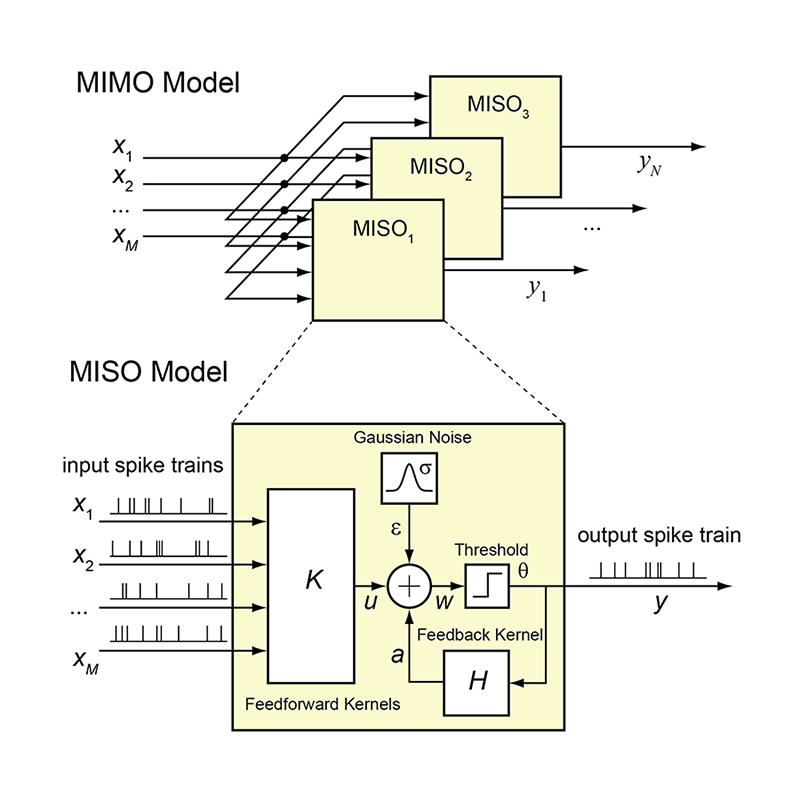

The overarching goal of our research is to build biomimetic devices that can treat neurological disorders. Specifically, we develop next-generation modeling and neural interface methodologies to investigate brain function during naturalistic behaviors. We have two aims: to understand how brain regions such as the hippocampus perform cognitive functions, and to build cortical prostheses that restore and enhance cognitive functions lost to disease or injury.

We synergistically combine mechanistic and input-output modeling approaches to build computational models. We use these models to investigate the mechanisms underlying learning and memory and to develop hippocampal prostheses that restore and enhance memory functions lost to disease or injury.


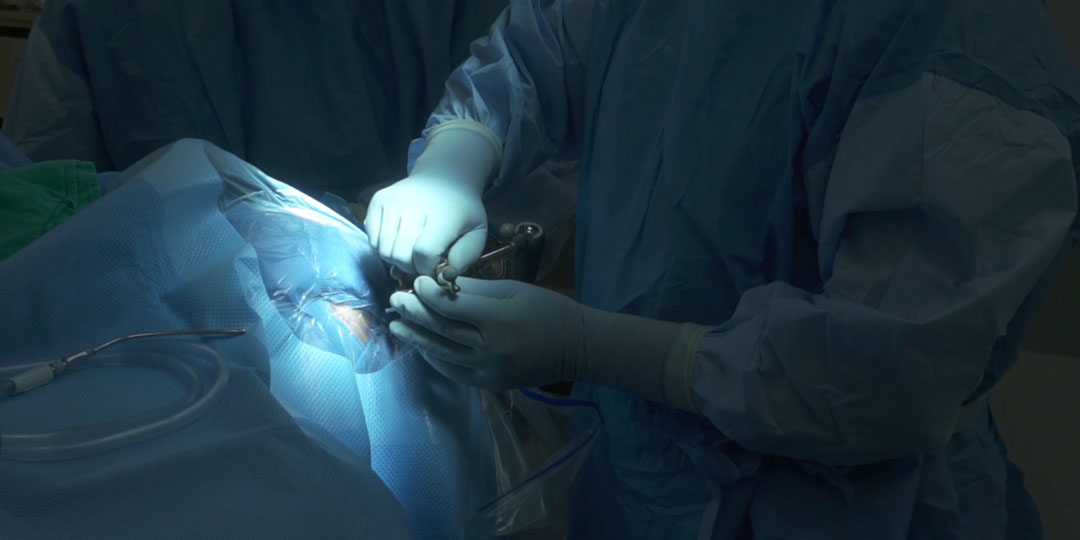

We develop invasive and noninvasive neural interface technologies for chronic, wireless, multi-region, large-scale recording and stimulation of the nervous system in untethered animals to study neural functions during naturalistic behaviors and develop implantable biomimetic neural prostheses.

Meet the interdisciplinary researchers driving innovation at the Signal Analysis and Interpretation Laboratory.

Associate Professor of Neurological Surgery and Biomedical Engineering

Principal Investigator

Biomedical Engineering

Postdoctoral Researcher

Electronic Information and Communications

Postdoctoral Researchers

Biochemistry and Philosophy

PhD Students

Senior Research Associate

Behavioral Signal Processing

Research Scientist

Emotion Recognition

Lead Research Engineer

Speech Processing

Research Associate working on computer vision and machine learning approaches for facial expression analysis and audiovisual speech processing.

Research Associate

Computer Vision

PhD candidate researching deep learning approaches for multimodal emotion recognition in human-computer interaction contexts.

PhD Candidate

Multimodal Deep Learning

PhD student focusing on natural language processing for mental health applications, developing models to analyze linguistic patterns in clinical contexts.

PhD Student

NLP for Healthcare

PhD candidate working on speech signal processing for children with developmental disorders, developing automated assessment tools.

PhD Candidate

Speech Signal Processing

PhD student researching computer vision approaches for human behavior analysis, with applications in healthcare and assistive technologies.

PhD Student

Computer Vision

Our latest research contributions to the scientific community.

Authors: S. Narayanan, D. Bone, E. Mower Provost, M. Chen, S. Rodriguez

Published in: IEEE Transactions on Affective Computing, 2024

This paper presents a novel deep learning framework for integrating multimodal behavioral signals (speech, language, facial expressions) to assess mental health conditions, demonstrating significant improvements over unimodal approaches.

Authors: V. Martinez, S. Taylor, J. Wilson, A. Patel, S. Narayanan

Published in: Proceedings of INTERSPEECH, 2024

This work introduces a novel self-supervised learning approach that leverages large amounts of unlabeled speech data to improve emotion recognition performance when labeled data is scarce.

Authors: M. Chen, S. Rodriguez, C.-C. Jay Kuo, M. Matarić, S. Narayanan

Published in: IEEE Transactions on Pattern Analysis and Machine Intelligence, 2023

This paper presents a novel transformer-based architecture for fusing multimodal information in human behavior analysis tasks, demonstrating state-of-the-art performance on several benchmark datasets.

Stay updated with the latest developments from our lab.

Our lab has received a $2.5M grant from the NIH to develop next-generation neural interfaces for memory restoration.


Our team's work on self-supervised learning for neural data analysis received the Best Paper Award at ICML 2025.


Two of our PhD students have been recognized with USC's Outstanding Innovation Awards for their work in neural prosthetics.

Our research has been featured on leading academic and media platforms.

Interested in collaborating or learning more about our research? Get in touch with us.

Signal Analysis and Interpretation Laboratory

University of Southern California

3740 McClintock Avenue, EEB 400

Los Angeles, CA 90089-2564

slab@usc.edu

+1 (213) 740-3477